Everything available in this article applies to OpenTofu as well.

1. What is Terraform?

Once upon a time, if you worked in the IT industry, there was a good chance you faced challenges when provisioning or managing infrastructure resources. It often felt like spinning plates: trying to keep everything running smoothly while making sure that all the resources were properly configured.

Then, Terraform came to the rescue and saved us from this daunting task that took a lot of time.

So what is Terraform? Terraform started as an open-source infrastructure as code (IaC) tool, developed by HashiCorp, that makes it easy to create and take care of your infrastructure resources. It has since changed its license to the Business Source License (BSL). If you want to learn more about the license change and how Terraform compares to OpenTofu, check out this article.

It’s built in Go (Golang), which lets it create different pieces of infrastructure in parallel, and it benefits from Go’s strong type-checking and error-handling capabilities, which help make it reliable.

Terraform uses HCL (HashiCorp Configuration Language) code to define its resources, but even JSON can be used for this, if you, of course, hate your life for whatever reason. Let’s get back to HCL. It is a human-readable, high-level configuration language that is designed to be easy to use and understand:

    resource "resource_type" "resource_name" {
      param1 = "value1"
      param2 = "value2"
      param3 = "value3"
      param4 = "value4"
    }

    resource "cat" "british" {
      color           = "orange"
      name            = "Garfield"
      age             = 5
      food_preference = ["Tuna", "Chicken", "Beef"]
    }

I don’t want to get into too much detail about what a resource is in this article, as I plan to build a series around this, but the above code, with a pseudo real-life example, is pretty much self-explanatory.

To keep it as simple as possible for now: when you declare something in HCL, it has a type (let’s suppose there is a resource type cat, for example) and a name on the first line. Inside the curly brackets, you specify how you want to configure that type of “something”.

In our example, we create a “cat” that is named “british” inside of Terraform. After that, we configure the cat’s real name, the one that everyone will know about, the color, the age, and what it likes to eat.

As you can see, at first glance the language seems pretty close to English. There is more to it, of course, but you are going to see that in the next articles.

One of the main benefits of using Terraform is that it is platform-agnostic. This means that people who write Terraform code don’t need to learn different languages to provision infrastructure resources in different cloud providers. However, this doesn’t mean that if you develop the code to provision a VM instance in AWS, you can use the same code for Azure or GCP.

Nevertheless, this can save a lot of time and effort, as engineers won’t need to constantly switch between a lot of tools (like going from CloudFormation for AWS to ARM templates for Azure).

A consistent experience is offered across all platforms.

Terraform is stateful. Is this a strength or is this a weakness? This topic is highly subjective and it depends on your use case.

One of the main benefits of statefulness in Terraform is that it allows it to make decisions about how to manage resources based on the current state of the infrastructure. This ensures that Terraform does not create unnecessary resources and helps to prevent errors and conflicts. This can save time and resources, make the provisioning process more efficient and also encourage collaboration between different teams.

Terraform keeps its state in a state file and uses it, together with your configuration, to determine the actions that need to be taken to reach the desired state of the resources.

Even though I’ve presented only strengths until now, being stateful has a relatively big weakness: managing the state file. This adds complexity to the system, and if the state file gets corrupted or deleted, it can lead to conflicts and errors in the provisioning process.

We will tackle this subject in detail in the next articles.

You may now ask, how can I easily install it? Well, HashiCorp’s guide does a great job of helping you install it: just select your platform and you are good to go.

Ok, now you have a high-level idea about what Terraform is, how to install it, but it’s ok if you still have a lot of questions, as all the answers will come shortly.

Originally posted on Medium.

2. What is a Terraform provider?

In short, Terraform providers are plugins that allow Terraform to interact with specific infrastructure resources. They act as an interface between Terraform and the underlying infrastructure, translating the Terraform configuration into the appropriate API calls and allowing Terraform to manage resources across a wide variety of environments.

One of the most common mistakes that people make when thinking about Terraform providers is assuming that they exist only for cloud vendors such as Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform (GCP), or Oracle Cloud Infrastructure (OCI). There are a lot of other providers that don’t belong to a cloud vendor, such as Template, Kubernetes, Helm, Spacelift, Artifactory, vSphere, and Aviatrix, to name a few.

Each provider has its own set of resources and data sources that can be managed and provisioned using Terraform. For example, the AWS provider has resources for managing EC2 instances, EBS volumes, and ELB load balancers.

Another great thing related to this feature is the fact that you can build your own Terraform provider. As long as something has an API, you can translate it to Terraform, so that’s just great. However, there are thousands of providers already available in the registry, so you usually don’t need to reinvent the wheel.
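
As a minimal sketch of how a provider from the registry is usually declared in a configuration, the block below asks for the AWS provider. The version constraint is an assumption chosen purely for illustration, so pick whatever suits your project.

```hcl
terraform {
  required_providers {
    aws = {
      # Registry address of the provider: <namespace>/<name>
      source = "hashicorp/aws"

      # Illustrative constraint (an assumption): any 4.x release from 4.50 onwards
      version = "~> 4.50"
    }
  }
}
```

If you leave this block out, Terraform will typically look for the latest available version of each provider you use.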

Before jumping in and showing you examples of how to use providers, let’s discuss how to use the documentation.

After you select your provider from the registry, you will be redirected to the provider’s page.

In the above view, click on documentation:

Usually, the first tab you are directed to will explain how to configure and use the provider, along with some simple examples of how to create resources. Of course, this view is different from one provider to another, but usually, you will see these examples.

We will get back to how to use the documentation when we talk about resources in the next article.

Even though AWS is the biggest player in the market when it comes to cloud vendors, in this article I will show an example provider with OCI and one with Azure.

You can choose your own, of course, by going to the registry and selecting whatever suits you.

OCI

You have multiple ways to connect to the OCI provider, as stated in the documentation, but I will only discuss the default one:

API Key Authentication (default)

Based on your tenancy and your user, you will have to specify the following details:

tenancy_ocid

user_ocid

private_key or private_key_path

private_key_password (optional, only required if your private key is encrypted)

fingerprint

region

    provider "oci" {
      tenancy_ocid     = "tenancy_ocid"
      user_ocid        = "user_ocid"
      fingerprint      = "fingerprint"
      private_key_path = "private_key_path"
      region           = "region"
    }

After you specify the correct values for all of the parameters mentioned above, you can interact with your Oracle Cloud Infrastructure account.

You will be able to define resources and data sources to create and configure different pieces of infrastructure.
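
To give a rough idea of what comes next once the provider is configured, here is a minimal sketch of a virtual cloud network. The compartment OCID, CIDR block, and display name are hypothetical placeholders, not values from a real tenancy.

```hcl
# Hypothetical values throughout: replace the compartment OCID with your own.
resource "oci_core_vcn" "example" {
  compartment_id = "ocid1.compartment.oc1..exampleuniqueid"
  cidr_blocks    = ["10.0.0.0/16"]
  display_name   = "example-vcn"
}
```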

AZURE

Similar to the OCI provider, the Azure provider can be configured in multiple ways:

Authenticating to Azure using a Service Principal and a Client Certificate

Authenticating to Azure using a Service Principal and a Client Secret

I will discuss the Azure CLI option, which is the easiest way to authenticate, as it leverages the az login command.

    provider "azurerm" {
      features {}
    }

This is the only configuration you have to do in the Terraform code. After running az login and following the prompt, you are good to go.
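
As a small sketch of what you could create once you are logged in, the configuration below adds a resource group. The name and location are hypothetical, and newer versions of the azurerm provider may additionally expect a subscription ID to be supplied, so treat this as a starting point rather than a definitive setup.

```hcl
provider "azurerm" {
  features {}
}

# Hypothetical name and location, used only for illustration
resource "azurerm_resource_group" "example" {
  name     = "example-resources"
  location = "West Europe"
}
```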

3. What is a Terraform Resource?

Resources in Terraform refer to the components of infrastructure that Terraform is able to manage, such as virtual machines, virtual networks, DNS entries, pods, and others. Each resource is defined by a type, such as “aws_instance”, “google_dns_record_set”, “kubernetes_pod”, or “oci_core_vcn”, and has a set of configurable properties, such as the instance size, vcn cidr, etc. Remember the cat example from the first article in this series.

Terraform can be used to create, update, and delete resources, managing dependencies between them and ensuring they are created in the correct order. You can also create explicit dependencies between some of the resources, if you would like to do that, by using depends_on.
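
To illustrate the difference between the two kinds of dependencies, here is a minimal sketch using three AWS resources. The choice of an Elastic IP as the dependent resource is an assumption made for the example; any resource that needs another one to exist first, without referencing it, would work the same way.

```hcl
resource "aws_vpc" "example" {
  cidr_block = "10.0.0.0/16"
}

# Implicit dependency: referencing aws_vpc.example.id tells Terraform
# to create the VPC before the internet gateway.
resource "aws_internet_gateway" "gw" {
  vpc_id = aws_vpc.example.id
}

# Explicit dependency: the Elastic IP references nothing from the gateway,
# so depends_on is used to force the ordering.
resource "aws_eip" "example" {
  depends_on = [aws_internet_gateway.gw]
}
```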

Let’s go in-depth and try to understand how to create these resources and how we can leverage the documentation.

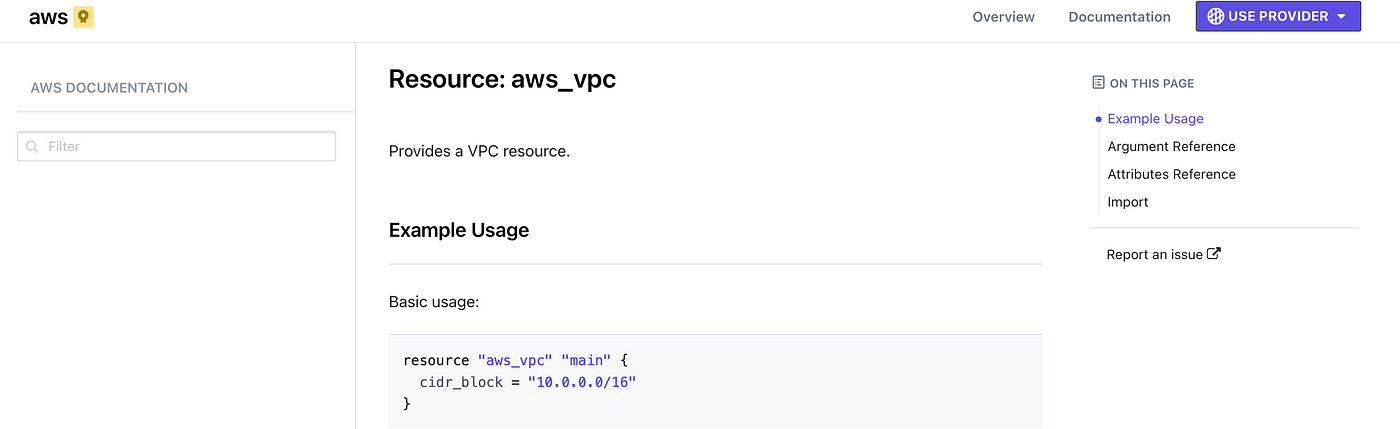

I will start with something simple, an aws_vpc.

First things first, whenever you are creating a resource, you will need to go to the documentation. It is really important to understand what you can do for a particular resource and I believe that you should try to build a habit around this.

On the right-hand side, you have the On this page section, with 4 elements that you should know by heart:

Example Usage → this will show you a couple of examples of how to use the resource

Argument Reference → in this section you are going to see all the parameters that can be configured for a resource. Some parameters are mandatory and others are optional (these parameters are marked with Optional placed between brackets)

Attributes Reference → here you will find out what a resource exposes, and I will talk about this in more detail when I get to the Outputs article

Import → if everything you know about Terraform comes from these articles, you may have guessed by now that existing resources can be imported into the state, and that is correct. In this section, you are going to find out how you can import that particular resource type
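
As a rough sketch of what an import can look like in configuration form, recent Terraform versions (1.5 or newer) support an import block. The VPC ID below is a hypothetical placeholder; the Import section of each resource’s documentation tells you which identifier that resource type expects.

```hcl
# Requires Terraform 1.5 or newer. The VPC ID is a hypothetical placeholder.
import {
  to = aws_vpc.example
  id = "vpc-0123456789abcdef0"
}

# The resource block the imported VPC will be bound to
resource "aws_vpc" "example" {
  cidr_block = "10.0.0.0/16"
}
```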

So let’s create a VPC. First, we need to define the provider as specified in the last article; the only caveat now is that we are doing it for a different cloud provider. If you forgot how to do it, just refer to the previous article, as it has two examples, for Azure and OCI, and you can easily do the same for AWS.

Nevertheless, I will show you an option for AWS too, using the credentials file that is also leveraged by the AWS CLI. The provider will automatically read the aws_access_key_id and aws_secret_access_key values from ~/.aws/credentials, so make sure you have that configured. An example can be found here.

We are then going to add the vpc configuration. Everything should be saved in a .tf file.

    provider "aws" {
      region = "us-east-1"
    }

    resource "aws_vpc" "example" {
      cidr_block = "10.0.0.0/16"
    }

As we see in the documentation, all the parameters for the vpc are optional, as AWS will assign everything that you don’t specify for you.

Now it is time to run the code. For this, we are going to learn some essential Terraform commands. I’m just going to touch upon the basics:

terraform init → initializes a working directory with Terraform configuration files. This should be the first command executed after creating a new Terraform configuration or cloning an existing one from version control. It also downloads the specified providers and modules, if you are using any, and saves them in a generated .terraform directory.

terraform plan → generates an execution plan, allowing you to preview the changes Terraform intends to make to your infrastructure.

terraform apply → executes the actions proposed in a Terraform plan. If you don’t provide it a plan file, it will generate an execution plan when you run the command, as if terraform plan ran. It prompts for confirmation first, so don’t be scared to run it.

terraform destroy → is a simple way to delete all remote objects managed by a specific Terraform configuration. It prompts for confirmation first, so don’t be scared to run it.

We will get into more detail when we tackle all the Terraform commands later in this series.

Ok, now that you know the basics, let’s run the code.

Go to the directory where you’ve created your Terraform file with the above configuration and run terraform init:

Initializing the backend...

Initializing provider plugins...

- Finding latest version of hashicorp/aws...

- Installing hashicorp/aws v4.50.0...

- Installed hashicorp/aws v4.50.0 (signed by HashiCorp)

Terraform has created a lock file .terraform.lock.hcl to record the provider

selections it made above. Include this file in your version control repository

so that Terraform can guarantee to make the same selections by default when

you run "terraform init" in the future.

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

After that, let’s run terraform plan to see what is going to happen:

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# aws_vpc.example will be created

+ resource "aws_vpc" "example" {

+ arn = (known after apply)

+ cidr_block = "10.0.0.0/16"

+ default_network_acl_id = (known after apply)

+ default_route_table_id = (known after apply)

+ default_security_group_id = (known after apply)

+ dhcp_options_id = (known after apply)

+ enable_classiclink = (known after apply)

+ enable_classiclink_dns_support = (known after apply)

+ enable_dns_hostnames = (known after apply)

+ enable_dns_support = true

+ enable_network_address_usage_metrics = (known after apply)

+ id = (known after apply)

+ instance_tenancy = "default"

+ ipv6_association_id = (known after apply)

+ ipv6_cidr_block = (known after apply)

+ ipv6_cidr_block_network_border_group = (known after apply)

+ main_route_table_id = (known after apply)

+ owner_id = (known after apply)

+ tags_all = (known after apply)

}

Plan: 1 to add, 0 to change, 0 to destroy.

───────────────────────────────────────────────────────────────────

Note: You didn't use the -out option to save this plan, so Terraform can't guarantee to take exactly these actions if you run "terraform apply" now.

In the plan, we see that we are going to create one vpc with the above configuration. You can observe that the majority of the parameters will be known after apply, but the cidr block is the one that we’ve specified.

Let’s apply the code and create the vpc with terraform apply:

Terraform will perform the following actions:

# aws_vpc.example will be created

+ resource "aws_vpc" "example" {

+ arn = (known after apply)

+ cidr_block = "10.0.0.0/16"

+ default_network_acl_id = (known after apply)

+ default_route_table_id = (known after apply)

+ default_security_group_id = (known after apply)

+ dhcp_options_id = (known after apply)

+ enable_classiclink = (known after apply)

+ enable_classiclink_dns_support = (known after apply)

+ enable_dns_hostnames = (known after apply)

+ enable_dns_support = true

+ enable_network_address_usage_metrics = (known after apply)

+ id = (known after apply)

+ instance_tenancy = "default"

+ ipv6_association_id = (known after apply)

+ ipv6_cidr_block = (known after apply)

+ ipv6_cidr_block_network_border_group = (known after apply)

+ main_route_table_id = (known after apply)

+ owner_id = (known after apply)

+ tags_all = (known after apply)

}

Plan: 1 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value:

Enter yes at the prompt and you will be good to go.

Enter a value: yes

aws_vpc.example: Creating...

aws_vpc.example: Creation complete after 3s [id=vpc-some_id]

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

Woohoo, we have created a vpc using Terraform. You can then go into the AWS console and see it in the region you’ve specified for your provider.

Very nice and easy, I would say, but before destroying the vpc, let’s see how we can create a resource that references this existing vpc.

An AWS internet gateway exists only inside of a vpc. So let’s go and check the documentation for the internet gateway.

From the documentation, we see in the example directly that we can reference a vpc id for creating the internet gateway:

    resource "aws_internet_gateway" "gw" {
      vpc_id = ""
    }

But the 100-point question is, how can we reference the above-created vpc? Well, that is not very hard if you remember the following:

type.name.attribute

All resources have a type, a name, and some attributes they expose. The exposed attributes are part of the Attributes reference section in the provider. Some providers will explicitly mention they are exposing everything from Argument reference + Attribute reference.

Let’s take our vpc as an example: its type is aws_vpc, its name is example, and it exposes a bunch of things (remember, the documentation is your best friend).

So, as the internet gateway requires a vpc_id and we want to reference our existing one, our code will look like this in the end:

    provider "aws" {
      region = "us-east-1"
    }

    resource "aws_vpc" "example" {
      cidr_block = "10.0.0.0/16"
    }

    resource "aws_internet_gateway" "gw" {
      vpc_id = aws_vpc.example.id
    }

We can then reapply the code with terraform apply, and Terraform will simply compare what we have already created with what we have in our configuration and create only the internet gateway.

aws_vpc.example: Refreshing state... [id=vpc-some_id]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# aws_internet_gateway.gw will be created

+ resource "aws_internet_gateway" "gw" {

+ arn = (known after apply)

+ id = (known after apply)

+ owner_id = (known after apply)

+ tags_all = (known after apply)

+ vpc_id = "vpc-some_id"

}

Plan: 1 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

aws_internet_gateway.gw: Creating...

aws_internet_gateway.gw: Creation complete after 2s [id=igw-some_igw]

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

Pretty neat, right?

Once we are done with our infrastructure, we can destroy it using terraform destroy:

aws_vpc.example: Refreshing state... [id=vpc-some_vpc]

aws_internet_gateway.gw: Refreshing state... [id=igw-some_igw]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

- destroy

Terraform will perform the following actions:

# aws_internet_gateway.gw will be destroyed

- resource "aws_internet_gateway" "gw" {

- all parameters are specified here

}

# aws_vpc.example will be destroyed

- resource "aws_vpc" "example" {

- all parameters are specified here

}

Plan: 0 to add, 0 to change, 2 to destroy.

Do you really want to destroy all resources?

Terraform will destroy all your managed infrastructure, as shown above.

There is no undo. Only 'yes' will be accepted to confirm.

Enter a value: yes

aws_internet_gateway.gw: Destroying... [id=igw-some_igw]

aws_internet_gateway.gw: Destruction complete after 2s

aws_vpc.example: Destroying... [id=vpc-some_vpc]

aws_vpc.example: Destruction complete after 0s

Destroy complete! Resources: 2 destroyed.

It can be a little overwhelming, but bear with me and understand the key points:

A resource is a component that can be managed with Terraform (a VM, a Kubernetes Pod, etc)

Documentation is your best friend, understand how to use those 4 sections from it

There are 4 essential commands that help you provision and destroy your infrastructure: init/plan/apply/destroy

When referencing a resource from a configuration we are using type.name.attribute

4. Data Sources and Outputs

Terraform resources are great and you can do a bunch of stuff with them. But did I tell you that you can use Data Sources and Outputs in conjunction with them to better implement your use case? Let’s jump into it.

To put it as simply as possible, a data source is a configuration object that retrieves data from an external source and can be used in resources as arguments when they are created or updated. When I am talking about an external source, I am referring to absolutely anything: manually created infrastructure, resources created from other Terraform configurations, and others.

Data sources are defined in their respective providers and you can use them with a special block called data. The documentation of a data source is pretty similar to that of a resource, so if you’ve mastered how to use that one, this will be a piece of cake.

Let’s take an example of a data source that returns the most recent ami_id (image id) of an Ubuntu image in AWS.

    provider "aws" {
      region = "us-east-1"
    }

    data "aws_ami" "ubuntu" {
      filter {
        name   = "name"
        values = ["ubuntu-*"]
      }

      most_recent = true
    }

    output "ubuntu" {
      value = data.aws_ami.ubuntu.id
    }

I am putting a filter on the image name so that it matches all the image names that start with ubuntu-. I’m adding most_recent = true to get only one image, as the aws_ami data source doesn’t support returning multiple image ids. So this data source will return only the most recent Ubuntu ami.
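
Because anyone can publish an image whose name starts with ubuntu-, it may be worth narrowing the search further. The sketch below restricts the lookup to images owned by Canonical’s AWS account; the exact name pattern is an assumption and should be adjusted to the Ubuntu release you need.

```hcl
data "aws_ami" "ubuntu" {
  most_recent = true

  # 099720109477 is the AWS account ID Canonical publishes Ubuntu images from
  owners = ["099720109477"]

  filter {
    name = "name"
    # Name pattern is an assumption; adjust it to the release you need
    values = ["ubuntu/images/hvm-ssd/ubuntu-jammy-22.04-amd64-server-*"]
  }

  filter {
    name   = "virtualization-type"
    values = ["hvm"]
  }
}
```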

In the code example, there is also one reference to an output, but I haven’t exactly told you what an output is, have I?

An output is a way to easily view the value of a specific data source, resource, local, or variable after Terraform has finished applying changes to infrastructure. It is defined in the Terraform configuration and can be viewed using the terraform output command, but just to reiterate, only after a terraform apply happens. Outputs can also be used to expose different resources inside a module, but we will discuss this in another post.

Outputs don’t depend on a provider at all, they are a special kind of block that works independently from them.

The most important parameters an output supports are value, for which you specify what you want to see, and description (optional) in which you explain what that output wants to achieve.
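
A minimal sketch of an output that uses both parameters could look like this. The output name ubuntu_ami_id is a hypothetical choice made for the example.

```hcl
data "aws_ami" "ubuntu" {
  most_recent = true

  filter {
    name   = "name"
    values = ["ubuntu-*"]
  }
}

# "ubuntu_ami_id" is a hypothetical name chosen for this example
output "ubuntu_ami_id" {
  description = "ID of the most recent AMI whose name starts with ubuntu-"
  value       = data.aws_ami.ubuntu.id
}
```

After an apply, running terraform output ubuntu_ami_id should print just that one value.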

Let’s go back to our example.

In the output, I have specified a reference to the above data source. When we are referencing a resource, we are using type.name.attribute. For data sources, it’s pretty much the same, but we have to prefix it with data, so data.type.name.attribute will do the trick.

As I mentioned above, in order to see what this returns, you will first have to apply the code. You are not going to see the contents of a data source without an output, so I encourage you to use them at the beginning when you are trying to get familiar with them.

This is the output of a terraform apply:

data.aws_ami.ubuntu: Reading...

data.aws_ami.ubuntu: Read complete after 3s [id=ami-0f388924d43083179]

Changes to Outputs:

+ ubuntu = "ami-0f388924d43083179"

You can apply this plan to save these new output values to the Terraform state, without changing any real infrastructure.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

Apply complete! Resources: 0 added, 0 changed, 0 destroyed.

Outputs:

ubuntu = "ami-0f388924d43083179"

Just to make the differences between them clearer: in an apply, the first state a resource goes through is creating, whereas a data source goes through a reading state.

Now, let’s use this image to create an ec2 instance:

    provider "aws" {
      region = "us-east-1"
    }

    data "aws_ami" "ubuntu" {
      filter {
        name   = "name"
        values = ["ubuntu-*"]
      }

      most_recent = true
    }

    output "ubuntu" {
      value = data.aws_ami.ubuntu.id
    }

    resource "aws_instance" "web" {
      ami           = data.aws_ami.ubuntu.id
      instance_type = "t2.micro"
    }

Just by referencing the ami id from our data source and an instance type, we are able to create an ec2 instance with a terraform apply.

Don’t forget to delete your infrastructure if you are practicing, as everything you create will incur costs. Do that with a terraform destroy.

5. Terraform Variables and Locals

Terraform variables and locals are used to better organize your code, easily change it, and make your configuration reusable.

Before jumping into variables and locals in Terraform, let’s first discuss their supported types.

Usually, in any programming language, when we define a variable or a constant, we either assign it a type or its type is inferred.

Supported types in Terraform:

Primitive:

String

Number

Bool

Complex — These types are created from other types:

List

Set

Map

Object

Null — Usually represents absence, really useful in conditional expressions.

There is also the any type, in which you basically add whatever you want without caring about the type, but I really don’t recommend it as it will make your code harder to maintain.

Variables

Every variable will be declared with a variable block and we will always use it with var.variable_name. Let’s see this in action:

    resource "aws_instance" "web" {
      ami           = data.aws_ami.ubuntu.id
      instance_type = var.instance_type
    }

    variable "instance_type" {
      description = "EC2 instance type"
      type        = string
      default     = "t2.micro"
    }

I have declared a variable called instance_type and in it, I’ve added 3 fields, all of which are optional, but usually, it is a best practice to add these, or at least the type and description. Well, there are three other possible arguments (sensitive, validation, and nullable), but let’s not get too overwhelmed by this.
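
For the curious, here is a minimal sketch of how those three extra arguments might be used. The list of allowed instance types and the db_password variable are assumptions made up for this example.

```hcl
variable "instance_type" {
  description = "EC2 instance type"
  type        = string
  default     = "t2.micro"

  # Reject an explicit null instead of silently accepting it
  nullable = false

  # The allowed values are an assumption chosen for illustration
  validation {
    condition     = contains(["t2.micro", "t3.micro"], var.instance_type)
    error_message = "The instance type must be t2.micro or t3.micro."
  }
}

# Hypothetical variable: sensitive hides the value in plan and apply output
variable "db_password" {
  description = "Password for a hypothetical database"
  type        = string
  sensitive   = true
}
```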

In the resource block above, I’m referencing the variable, with var.instance_type and due to the fact I’ve set the default value to t2.micro, my variable will get that particular value and I don’t need to do anything else. Cool, right?

Well, let’s suppose we are not providing any default value and we are not doing anything else and we run a terraform apply. As Terraform does not know the value of the variable, it will ask you to provide a value for it. Pretty neat, that means you can forget to assign it. This is not a best practice, though.

There are a couple of other ways you can assign values to variables. If you happen to specify a value for a variable in multiple ways, Terraform will use the last value it finds, by taking into consideration their precedence order. I’m going to present these to you now:

using a default → as in the example above, this will be overwritten by any other option

using a terraform.tfvars file → this is a special file in which you can add values to your variables

instance_type = "t2.micro"

using a *.auto.tfvars file → similar to the terraform.tfvars file, but will take precedence over it. The variables' values will be declared in the same way. The “*” is a placeholder for any name you want to use

using -var or -var-file when running terraform plan/apply/destroy. When you are using both of them in the same command, the value will be taken from the last option.

terraform apply -var="instance_type=t3.micro" -var-file="terraform.tfvars" → This will take the value from the var file, but if we specify the -var option last, it will get the value from there.
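
To make the precedence between the two kinds of files more concrete, here is a small sketch showing the contents of two files side by side. The file name dev.auto.tfvars is a hypothetical choice; each section below would live in its own file.

```hcl
# --- terraform.tfvars ---
instance_type = "t2.micro"

# --- dev.auto.tfvars (hypothetical file name) ---
# Loaded after terraform.tfvars, so this value wins unless -var or -var-file
# is passed on the command line.
instance_type = "t3.micro"
```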

Some other variable examples:

    variable "my_number" {
      description = "Number example"
      type        = number
      default     = 10
    }

    variable "my_bool" {
      description = "Bool example"
      type        = bool
      default     = false
    }

    variable "my_list_of_strings" {
      description = "List of strings example"
      type        = list(string)
      default     = ["string1", "string2", "string3"]
    }

    variable "my_map_of_strings" {
      description = "Map of strings example"
      type        = map(string)
      default = {
        key1 = "value1"
        key2 = "value2"
        key3 = "value"
      }
    }

    variable "my_object" {
      description = "Object example"
      type = object({
        parameter1 = string
        parameter2 = number
        parameter3 = list(number)
      })
      default = {
        parameter1 = "value"
        parameter2 = 1
        parameter3 = [1, 2, 3]
      }
    }

    variable "my_map_of_objects" {
      description = "Map(object) example"
      type = map(object({
        parameter1 = string
        parameter2 = bool
        parameter3 = map(string)
      }))
      default = {
        elem1 = {
          parameter1 = "value"
          parameter2 = false
          parameter3 = {
            key1 = "value1"
          }
        }
        elem2 = {
          parameter1 = "another_value"
          parameter2 = true
          parameter3 = {
            key2 = "value2"
          }
        }
      }
    }

    variable "my_list_of_objects" {
      description = "List(object) example"
      type = list(object({
        parameter1 = string
        parameter2 = bool
      }))
      default = [
        {
          parameter1 = "value"
          parameter2 = false
        },
        {
          parameter1 = "value2"
          parameter2 = true
        }
      ]
    }

In the above example, there are two variables of a simple type (number and bool), and a couple of complex types. As the simple ones are pretty easy to understand, let’s jump into the others.

list(string) — in this variable, you can declare as many strings as you want inside the list. You access an element of the list by using var.my_list_of_strings[index]. Keep in mind that list indexes start from 0, so var.my_list_of_strings[1] will return string2.

map(string) — in this variable, you can declare as many key:value pairs as you want. You access an element of the map by using var.my_map_of_strings[key], where key is on the left-hand side of the equal sign. var.my_map_of_strings["key3"] will return value.

object({}) — inside of an object, you declare parameters as you see fit. You can have simple types inside of it and even complex types, and you can declare as many as you want. You can consider an object to be a map having more explicit types defined for the keys. You access the attributes of an object by using the same logic as you would for a map.

map(object({})) — I’ve specified this complex build because it is something I use a lot inside of my code, as it works well with for_each (don’t worry, we will talk about this in another post). You access a property of an object in the map by using var.my_map_of_objects["key"]["parameter"], and if there are any other complex parameters defined, you will have to go deeper. var.my_map_of_objects["elem1"]["parameter1"] will return value. var.my_map_of_objects["elem1"]["parameter3"]["key1"] will return value1.

list(object({})) — This is something I’m using in dynamic blocks (again, we will discuss this in detail in another post). You access a property of an object in the list by using var.my_list_of_objects[index]["parameter"]. Again, if there are any parameters that are complex, you will have to go deeper. var.my_list_of_objects[0]["parameter1"] will return value.
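
As a small, self-contained sketch of these access patterns, the configuration below declares a hypothetical servers variable and reads values out of it through outputs. The variable name, keys, and values are assumptions made up for the example. Note that object attributes can be read either with the bracket syntax shown above or with a dot.

```hcl
# Hypothetical variable used only to demonstrate the access syntax
variable "servers" {
  type = map(object({
    instance_type = string
    tags          = map(string)
  }))
  default = {
    web = {
      instance_type = "t2.micro"
      tags = {
        env = "dev"
      }
    }
  }
}

# Bracket syntax all the way down
output "web_env" {
  value = var.servers["web"]["tags"]["env"]
}

# Dot syntax for the object attribute, equivalent to ["instance_type"]
output "web_instance_type" {
  value = var.servers["web"].instance_type
}
```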

One important thing to note is the fact that you cannot reference other resources or data sources inside a variable, so you cannot say that a variable is equal to a resource attribute by using the type.name.attribute.

Locals

On the other hand, a local variable assigns a name to an expression, making it easier for you to reference it, without having to write that expression a gazillion times. Locals are defined in a locals block, and you can have multiple local variables defined in a single locals block. Let’s take a look:

locals {

instance_type = "t2.micro"

most_recent = true

}

data "aws_ami" "ubuntu" {

filter {

name = "name"

values = ["ubuntu-*"]

}

most_recent = local.most_recent

}

resource "aws_instance" "web" {

ami = data.aws_ami.ubuntu.id

instance_type = local.instance_type

}

As you see, we are defining two local variables inside the locals block and we are referencing them throughout our configuration with local.local_variable_name.

As opposed to variables, inside of a local you can reference whatever resource or data source attribute you want. We can even define more complex operations inside of them, but for now, let’s just let this sink in, as we are going to experiment with these some more in the future.
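As a minimal sketch of that difference, building on the example above: the locals below reference a data source attribute and another local, which a variable default could not do. The names ami_id and name_tag are hypothetical and only used for illustration.

```hcl
locals {
  # A local may point at a data source (or resource) attribute...
  ami_id = data.aws_ami.ubuntu.id

  # ...and may also be derived from another local.
  name_tag = format("web-%s", local.instance_type)
}
```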

Originally posted on Medium.

6. Terraform Provisioners and Null Resource

Terraform provisioners have nothing in common with providers. You can use provisioners to run different commands or scripts on your local machine or a remote machine, and also copy files from your local machine to a remote one. Provisioners exist inside of a resource, so in order to use one, you simply have to add a provisioner block in that particular resource.

One thing worth mentioning is that a provisioner cannot reference its parent resource by name, but it can use the self object, which represents that resource.
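Here is a small sketch of the self object, assuming the data.aws_ami.ubuntu data source from the Locals example is present. The resource name self_example is hypothetical.

```hcl
resource "aws_instance" "self_example" {
  ami           = data.aws_ami.ubuntu.id
  instance_type = "t2.micro"

  provisioner "local-exec" {
    # self refers to aws_instance.self_example, the parent resource
    command = "echo The instance private IP is ${self.private_ip}"
  }
}
```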

They are considered a last resort, as they are not a part of the Terraform declarative model.

There are 3 types of provisioners:

local-exec

file (should be used in conjunction with a connection block)

remote-exec (should be used in conjunction with a connection block)

All provisioners support two interesting options: when and on_failure.

You can run provisioners either when the resource is created (which is, of course, the default option) or if your use case asks for it, run it when a resource is destroyed.

By default, on_failure is set to fail, which will fail the apply if the provisioner fails. This is the expected Terraform behaviour, but you can tell Terraform to ignore a failure by setting on_failure to continue.
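To make the two options concrete, here is a minimal sketch of a destroy-time provisioner that does not block the destroy if its command fails. The resource name and the echoed message are hypothetical.

```hcl
resource "null_resource" "cleanup_example" {
  provisioner "local-exec" {
    when       = destroy  # run on destroy instead of on create
    on_failure = continue # ignore a failing command and carry on
    command    = "echo 'resource is being destroyed'"
  }
}
```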

From experience, I can tell you that sometimes provisioners fail for no apparent reason, or they can even appear to be working while not doing what they are expected to. Still, I believe it is very important to know how to use them because, in some of your use cases, you may not have any alternatives.

Before jumping into each of the provisioners, let’s talk about null resources. A null resource is basically something that doesn’t create anything on its own, but you can use it to define provisioner blocks. It also has a “triggers” argument, a map of values that recreates the resource, and hence reruns the provisioner block, whenever one of those values changes.
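A minimal sketch of the triggers behaviour, assuming a hypothetical setup.sh script next to the configuration: whenever the hash of the script changes, the null resource is replaced and the provisioner runs again.

```hcl
resource "null_resource" "rerun_on_change" {
  triggers = {
    # Any change to this value forces the resource to be recreated
    script_hash = filemd5("${path.module}/setup.sh")
  }

  provisioner "local-exec" {
    command = "sh ${path.module}/setup.sh"
  }
}
```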

Local-Exec

As its name suggests, a local-exec block is going to run a script on your local machine. Nothing too fancy about it. Apart from the when and on_failure options, there are a couple of other options you can specify:

command — what to run; this is the only required argument.

working_dir — where to run it

interpreter — what interpreter to use (e.g /bin/bash), by default terraform will decide based on your system os

environment — key/value pairs that represent the environment

Let’s see this in action in a null resource and observe the output of a terraform apply

resource "null_resource" "this" {

provisioner "local-exec" {

command = "echo Hello World!"

}

}

# null_resource.this: Creating...

# null_resource.this: Provisioning with 'local-exec'...

# null_resource.this (local-exec): Executing: ["/bin/sh" "-c" "echo Hello World!"]

# null_resource.this (local-exec): Hello World!

# null_resource.this: Creation complete after 0s [id=someid]

You can use this to run different scripts before or after an apply of a specific resource by using depends_on (we will talk about this in another article in more detail).
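The other local-exec options listed earlier can be sketched as follows. This assumes /bin/bash is available on the machine running Terraform; the resource name and the TARGET_ENV variable are hypothetical.

```hcl
resource "null_resource" "local_exec_options" {
  provisioner "local-exec" {
    # $TARGET_ENV is expanded by the shell, not by Terraform
    command     = "echo \"Deploying to $TARGET_ENV\""
    working_dir = path.module
    interpreter = ["/bin/bash", "-c"]

    environment = {
      TARGET_ENV = "staging"
    }
  }
}
```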

Connection Block

Before going into the other two provisioners, remote-exec and file, let’s take some time and understand the connection block. In order to run or copy something on a remote vm, you will first have to connect to it, right?

Connection blocks support both ssh and winrm, so you can easily connect to both your Linux and Windows vms.

You even have the option to connect via a bastion host or a proxy, but I will just show you a simple connection block for a Linux VM.

connection {

type = "ssh"

user = "root"

private_key = "private_key_contents"

host = "host"

}

File

The file provisioner is used to copy a file from your local machine to a remote vm. There are three arguments that are supported:

source (what file to copy)

content (the direct content to copy on the destination)

destination (where to put the file)

As mentioned before, file needs a connection block to make sure it works properly. Let’s see an example on an ec2 instance.

provider "aws" {

region = "us-east-1"

}

locals {

instance_type = "t2.micro"

most_recent = true

}

data "aws_ami" "ubuntu" {

filter {

name = "name"

values = ["ubuntu-*"]

}

most_recent = local.most_recent

}

resource "aws_key_pair" "this" {

key_name = "key"

public_key = file("~/.ssh/id_rsa.pub")

}

resource "aws_instance" "web" {

ami = data.aws_ami.ubuntu.id

instance_type = local.instance_type

key_name = aws_key_pair.this.key_name

}

resource "null_resource" "copy_file_on_vm" {

depends_on = [

aws_instance.web

]

connection {

type = "ssh"

user = "ubuntu"

private_key = file("~/.ssh/id_rsa")

host = aws_instance.web.public_dns

}

provisioner "file" {

source = "./file.yaml"

destination = "./file.yaml"

}

}

# null_resource.copy_file_on_vm: Creating...

# null_resource.copy_file_on_vm: Provisioning with 'file'...

# null_resource.copy_file_on_vm: Creation complete after 2s [id=someid]

Remote-Exec

Remote-Exec is used to run a command or a script on a remote-vm.

It supports the following arguments:

inline → list of commands that should run on the vm

script → a script that runs on the vm

scripts → multiple scripts to run on the vm

You have to provide only one of the above arguments as they are not going to work together.

Similar to file, you will need to add a connection block.

resource "null_resource" "remote_exec" {

depends_on = [

aws_instance.web

]

connection {

type = "ssh"

user = "ubuntu"

private_key = file("~/.ssh/id_rsa")

host = aws_instance.web.public_dns

}

provisioner "remote-exec" {

inline = [

"mkdir dir1"

]

}

}

# null_resource.remote_exec: Creating...

# null_resource.remote_exec: Provisioning with 'remote-exec'...

# null_resource.remote_exec (remote-exec): Connecting to remote host via SSH...

# null_resource.remote_exec (remote-exec): Host: somehost

# null_resource.remote_exec (remote-exec): User: ubuntu

# null_resource.remote_exec (remote-exec): Password: false

# null_resource.remote_exec (remote-exec): Private key: true

# null_resource.remote_exec (remote-exec): Certificate: false

# null_resource.remote_exec (remote-exec): SSH Agent: true

# null_resource.remote_exec (remote-exec): Checking Host Key: false

# null_resource.remote_exec (remote-exec): Target Platform: unix

# null_resource.remote_exec (remote-exec): Connected!

# null_resource.remote_exec: Creation complete after 3s [id=someid]

In order to use the above code, just take the file example, replace the copy_file_on_vm null resource with this one, and you are good to go.

You have to make sure that you can connect to your vm, so make sure you have a security rule that permits ssh access in your security group.
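A minimal sketch of such a rule, assuming the instance lives in the default VPC. The resource name allow_ssh and the CIDR range are hypothetical, so replace the range with your own address.

```hcl
resource "aws_security_group" "allow_ssh" {
  name        = "allow-ssh"
  description = "Allow inbound SSH for provisioners"

  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["203.0.113.10/32"] # hypothetical: use your own IP here
  }
}
```

To attach it, you would add vpc_security_group_ids = [aws_security_group.allow_ssh.id] to the aws_instance resource.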

Even though I don’t recommend provisioners, keep in mind they may be a necessary evil.

Originally posted on Medium.

7. Terraform Loops and Conditionals

In this post, we will talk about how to use Count, for_each, for loops, ifs, and ternary operators inside of Terraform. It will be a long journey, but this will help a lot when writing better Terraform code.

As I am planning to use the Kubernetes provider in this lesson, you can easily create your own Kubernetes cluster using Kind. More details here.

👉 COUNT 👈

If I say I hate count, that would be an understatement. I get why people use it, but in my book, since for_each was released, I never looked back. This is my really unpopular opinion, so don’t take my word for it.

Let’s see what we can do with count. Using count we can, you guessed it, create multiple resources of the same type. Every Terraform resource supports the count meta-argument. Count exposes a count.index object, which can be used in the same way you would use an iterator in any programming language.

resource "kubernetes_namespace" "this" {

count = 5

metadata {

name = format("ns%d", count.index)

}

}

The above block will create 5 namespaces in Kubernetes with the following names: ns0, ns1, ns2, ns3, ns4, because count.index starts at 0. All fun and games until now. If you change the count to 4, the last namespace, ns4, will be deleted if you re-apply the code.

In any case, when you are using count, you can address a particular index of your resource by using type.name[index]. In our case that means individual resources can be accessed with kubernetes_namespace.this[0] to kubernetes_namespace.this[4].
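As a small sketch of this addressing, the outputs below read the name of the first and the last namespace created with count = 5. The output names are hypothetical; metadata is a nested block, which is why it is indexed with [0].

```hcl
output "first_namespace_name" {
  value = kubernetes_namespace.this[0].metadata[0].name
}

output "last_namespace_name" {
  value = kubernetes_namespace.this[4].metadata[0].name
}
```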

Let’s suppose you want to customize the names of the namespaces a little bit. For that, we can use a local or a variable in conjunction with a function. Don’t worry if you see a couple of functions now, we will discuss functions in a separate article in detail.

locals {

namespaces = ["frontend", "backend", "database"]

}

resource "kubernetes_namespace" "this" {

count = length(local.namespaces)

metadata {

name = local.namespaces[count.index]

}

}

The above will create three namespaces called frontend, backend, and database. Let’s suppose for whatever reason, you want to remove the backend namespace and keep only the other two.

What is going to happen when you reapply the code?

Plan: 1 to add, 0 to change, 2 to destroy.

Let’s break this down. We are using a list with 3 elements, which means that our list has 3 indexes: 0, 1, and 2. If we remove the element from index 1, backend, the element from index 2 becomes the element from index 1.

To make it even more clear, initially, we have the following resources:

kubernetes_namespace.this[0] → frontend

kubernetes_namespace.this[1] → backend

kubernetes_namespace.this[2] → database

After we remove backend:

kubernetes_namespace.this[0] → frontend

kubernetes_namespace.this[1] → database

Due to the fact that database changes its terraform identity by moving from index 2 to index 1, it will be recreated, and imagine all the problems you will have when you are recreating a namespace with a ton of things inside.

Also, let’s suppose you are creating a hundred ec2 instances and you are using a list for that to better configure them. For some reason, let’s suppose you want to remove the instance with index 13 (see what I did there?). What’s going to happen with the ones from index 14 to 99? They will be recreated because all of them are going to change their index to what it was minus 1.

And that’s why I hate count. It doesn’t give you the flexibility to create very generic resources and in my book, that’s a hard pass.

👉 FOR_EACH 👈

I am a big fan of using for_each on all of the resources, as you never know when you want to create multiple resources of the same kind. For_Each can be used with map and set variables, but I don’t remember a use case in which I used a set. So what I’m always doing is using for_each on maps, and more specifically on map(object). I’ll show you what that looks like in a bit.

For_each exposes one object called each. This object contains a key and a value, which can be used with each.key and each.value.

With for_each, you will reference an instance of your resource with type.name[key].

Let’s use a variable this time to create the namespaces, and let’s configure some more information for them in order to see why for_each is superior from my point of view.

resource "kubernetes_namespace" "this" {

for_each = var.namespaces

metadata {

name = each.key

annotations = each.value.annotations

labels = each.value.labels

}

}

variable "namespaces" {

type = map(object({

annotations = optional(map(string), {})

labels = optional(map(string), {})

}))

default = {

namespace1 = {}

namespace2 = {

labels = {

color = "green"

}

}

namespace3 = {

annotations = {

imageregistry = "https://hub.docker.com/"

}

}

namespace4 = {

labels = {

color = "blue"

}

annotations = {

imageregistry = "my_awesome_registry"

}

}

}

}

We have defined a variable called namespaces and we are going to iterate through it on the kubernetes_namespace resource. This variable has a map(object) type and inside of it, we’ve defined two optional properties: annotations and labels.

Optional can be used on parameters inside object variables to give the possibility to omit that particular parameter and to provide a default value for it instead. As this feature is available from Terraform 1.3.0, I believe it will soon be embraced by the community as a best practice (for me, it is already). Inside this variable, we have added a default value just for demo purposes. In the real world, you are going to separate resources from variables in their own files anyway, and you are going to provide empty maps as default values if you are using the above approach with optionals on your parameters, but that’s a completely different story.

Let’s go a little bit through our default value:

All namespaces will be our each.key

Everything after namespaceX = will be our each.value → meaning that if we want to reference labels or annotations, we are going to use each.value.labels or each.value.annotations

Due to the fact that both parameters inside of our variable have been defined with the optional modifier, we can omit them, meaning that namespace1 = {} is a valid configuration. This works because both omitted parameters fall back to their default, an empty map, and the name in the metadata block comes from each.key.

The entire configuration translates into:

namespace1 → will have no labels and no annotations

namespace2 → will have only labels

namespace3 → will have only annotations

namespace4 → will have both labels and annotations

If I want to remove namespace2 for whatever reason, what is going to happen to namespace3 and namespace4? Absolutely nothing. Due to the fact that we are not using a list anymore and we are using a map, by removing an element of the map, there is going to be no change to the others (remember, Terraform identifies our resources with kubernetes_namespace.this["namespace1"] to kubernetes_namespace.this["namespace4"]).

And this is why I will always vouch for for_each instead of count.
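For completeness, here is a minimal sketch of for_each over a set of strings, which I mentioned at the beginning of this section. With a set, each.key and each.value hold the same string. The resource name from_set and the output name are hypothetical.

```hcl
resource "kubernetes_namespace" "from_set" {
  # toset converts the list to a set, which for_each accepts
  for_each = toset(["frontend", "backend", "database"])

  metadata {
    name = each.key
  }
}

output "backend_namespace" {
  # Instances are addressed by key, not by position
  value = kubernetes_namespace.from_set["backend"].metadata[0].name
}
```

Removing "backend" from the set only affects kubernetes_namespace.from_set["backend"], for the same reason as with the map.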

👉 Ternary Operators 👈

I’ve seen plenty of people arguing that Terraform doesn’t have an if instruction. Well, it does, but you can use that only when you are building complex instructions in for loops (not for_each), which I will discuss soon. For any other type of condition, you have ternary operators and the syntax is:

condition ? val1 : val2

The above means if the condition is true, use val1, if the condition is false, use val2. Let’s see it in action:

locals {

use_local_name = false

name = "namespace1"

}

resource "kubernetes_namespace" "this" {

metadata {

name = local.use_local_name ? local.name : "namespace2"

}

}

In the above example, I’m checking if local.use_local_name is equal to true, and if it is, I’m going to give my namespace the name that is in local.name; otherwise, I am going to name it namespace2.

Of course, due to the fact that I’ve set use_local_name to false, this means that the name of my namespace will be namespace2.
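One widely used application of the ternary operator is creating a resource only when a flag is set, by combining it with count. The sketch below is only an illustration of that pattern; the variable create_namespace and the resource name are hypothetical.

```hcl
variable "create_namespace" {
  type    = bool
  default = true
}

resource "kubernetes_namespace" "optional" {
  # 1 instance when the flag is true, 0 instances otherwise
  count = var.create_namespace ? 1 : 0

  metadata {
    name = "optional-namespace"
  }
}
```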

The beautiful or the ugliest part of ternary operators (depending on who reads the code) is the fact that you can use nested conditionals.

Let’s build a local variable that uses nested conditionals:

locals {

val1 = 1

val2 = 2

val3 = 3

nested_conditional = local.val2 > local.val1 ? local.val3 > local.val2 ? local.val3 : local.val2 : local.val1

}

In the above operation, we are checking initially if val2 is greater than val1:

if it’s not, then nested_conditional will go to the last “:” and take the value of local.val1

if it is, then we are checking if val3 is greater than val2 and:

- if val3 is greater than val2, the value of nested_conditional will be val3

- if val3 is less than or equal to val2, the value of nested_conditional will be val2

These nested conditionals can get pretty hard to understand and usually if you see something that goes more than 3 or 4 levels, there is almost always an error in judgment somewhere or you should do some changes to the variable or expression that you are using when you are building this as it will get almost impossible to maintain in the long run.
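One way to keep such expressions readable, sketched here as a suggestion rather than a rule, is to give the inner condition its own local. The name larger_of_2_and_3 is hypothetical; the result is the same as in the nested version above.

```hcl
locals {
  val1 = 1
  val2 = 2
  val3 = 3

  # Inner condition extracted into its own named local
  larger_of_2_and_3 = local.val3 > local.val2 ? local.val3 : local.val2

  # Outer condition now reads as a single, flat ternary
  nested_conditional = local.val2 > local.val1 ? local.larger_of_2_and_3 : local.val1
}
```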

👉 For loops and Ifs 👈

If you are familiar with Python, you are going to notice pretty easily that for loops and ifs in Terraform are pretty similar to Python’s list comprehensions.

Let me show you what I’m talking about:

locals {

list_var = range(5)

map_var = {

cat1 = {

color = "orange",

name = "Garfield"

},

cat2 = {

color = "blue",

name = "Tom"

}

}

for_list_list = [for i in local.list_var : i * 2]

for_list_map = { for i in local.list_var : format("Number_%s", i) => i }

for_map_list = [for k, v in local.map_var : k]

for_map_map = { for k, v in local.map_var : format("Cat_%s", k) => v }

for_list_list_if = [for i in local.list_var : i if i > 2]

for_map_map_if = { for k, v in local.map_var : k => v if v.color == "orange" }

}

We’ve defined a list variable, list_var, that generates the numbers from 0 to 4, and a map variable with two elements, map_var. As you can see, for the other 6 locals defined in the code snippet, you can build both lists and maps by using this type of loop. By starting the value with [ you are creating a list, and by starting the value with { you are creating a map.

The difference from a syntax standpoint is that when you are building a map you have to provide the => symbol between the key and the value. The sky is the limit when it comes to these expressions: you can nest them on as many levels as you want depending on the structure you are iterating through, but this will become, again, very hard to maintain.

If you are cycling through a map variable and you are using a single iterator, you will actually cycle only through the values of the map. By using two, you will cycle through both keys and values (the first iterator will be the key, the second iterator will be the value).

for_list_list = [

0,

2,

4,

6,

8,

]

for_list_map = {

"Number_0" = 0

"Number_1" = 1

"Number_2" = 2

"Number_3" = 3

"Number_4" = 4

}

for_map_list = [

"cat1",

"cat2",

]

for_map_map = {

"Cat_cat1" = {

"color" = "orange"

"name" = "Garfield"

}

"Cat_cat2" = {

"color" = "blue"

"name" = "Tom"

}

}

for_list_list_if = [

3,

4,

]

for_map_map_if = {

"cat1" = {

"color" = "orange"

"name" = "Garfield"

}

}

Above are all the values of the locals defined with for loops and ifs. Let’s discuss the last two:

for_list_list_if = [for i in local.list_var : i if i > 2]

This is cycling through our initial list that holds the numbers from 0 to 4 and is creating a new list with only the elements that are greater than 2.

for_map_map_if = { for k, v in local.map_var : k => v if v.color == "orange" }

This one is cycling through our initial map variable and will recreate a new map with all the elements that have the color equal to orange.
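The single-iterator versus two-iterator behaviour on maps mentioned earlier can be sketched like this. The local names cats, cat_names, and cat_labels are hypothetical.

```hcl
locals {
  cats = {
    cat1 = { color = "orange", name = "Garfield" }
    cat2 = { color = "blue", name = "Tom" }
  }

  # One iterator over a map: v holds only the values
  cat_names = [for v in local.cats : v.name]
  # → ["Garfield", "Tom"]

  # Two iterators over a map: k is the key, v is the value
  cat_labels = [for k, v in local.cats : format("%s:%s", k, v.name)]
  # → ["cat1:Garfield", "cat2:Tom"]
}
```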

There is another operator called splat(*) that can help with providing a more concise way to reference some common operations that you would usually do with a for. This operator works only on lists, sets, and tuples.

locals {

splat_list = [

{

name = "Mike"

age = 25

},

{

name = "Bob"

age = 29

}

]

splat_list_names = local.splat_list[*].name

}

If, for example, you have a list of objects in the above format, you can easily build a list of all the names or ages from it by using the splat operator.
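To show what the splat operator is shorthand for, here is a minimal sketch that builds the same list with a for expression. The local names people, names_splat, and names_for are hypothetical.

```hcl
locals {
  people = [
    { name = "Mike", age = 25 },
    { name = "Bob", age = 29 },
  ]

  # Both expressions produce ["Mike", "Bob"]
  names_splat = local.people[*].name
  names_for   = [for p in local.people : p.name]
}
```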

Originally posted on Medium here.

8. Terraform CLI Commands

Throughout my posts, I said I don’t want to repeat myself or reinvent the wheel, right?

Well, I am keeping my promise, so if you want to see all commands that you can use and even download an awesome cheatsheet with them, you can follow this article which really nails it: https://spacelift.io/blog/terraform-commands-cheat-sheet

9. Terraform Functions

Terraform functions are built-in, reusable code blocks that perform specific tasks within Terraform configurations. They make your code more dynamic and ensure your configuration is DRY. Functions allow you to perform various operations, such as converting expressions to different data types, calculating lengths, and building complex variables.

These functions are split into multiple categories:

String

Numeric

Collection

Date and Time

Crypto and Hash

Filesystem

IP Network

Encoding

Type Conversion

This split, however, can become overwhelming to someone who doesn’t have that much experience with Terraform. For example, the formatlist function is considered to be a string function even though it modifies elements from a list. A list is a collection, so some may argue that this function should be considered a collection function, but at its core, it still makes changes to strings.

For that particular reason, I won’t specify the function type when I’ll describe them, but just go with what you can do with them. Of course, I will not go through all of the available functions, but through the ones, I am using throughout my configurations.

ToType Functions

ToType is not an actual function; rather, many functions can help you change the type of a variable to another type.

tonumber(argument) → With this function you can change a string that contains a valid number to a number; a number or null is returned unchanged, and anything else will result in an error

tostring(argument) → Changes a number/bool/string/null to a string

tobool(argument) → Changes a string (only “true” or “false”)/bool/null to a bool

tolist(argument) → Changes a set to a list

toset(argument) → Changes a list to a set

tomap(argument) → Converts its argument to a map

In Terraform, you are rarely going to need to use these types of functions, but I still thought they are worth mentioning.
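A short sketch of a few of these conversions in a locals block; all the local names are hypothetical.

```hcl
locals {
  port_number = tonumber("8080") # the number 8080
  flag        = tobool("true")   # the bool true

  # Converting a list to a set drops the duplicate "a"
  unique_names = toset(["a", "b", "a"])

  # ...and the set can be converted back to a list
  names_list = tolist(local.unique_names)
}
```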

format(string_format, unformatted_string)

The format function is similar to the printf function in C and works by formatting a number of values according to a specification string. It can be used to build different strings that may be used in conjunction with other variables. Here is an example of how to use this function:

locals {

string1 = "str1"

string2 = "str2"

int1 = 3

apply_format = format("This is %s", local.string1)

apply_format2 = format("%s_%s_%d", local.string1, local.string2, local.int1)

}

output "apply_format" {

value = local.apply_format

}

output "apply_format2" {

value = local.apply_format2

}

# Result in:

apply_format = "This is str1"

apply_format2 = "str1_str2_3"

formatlist(string_format, unformatted_list)

The formatlist function uses the same syntax as the format function but changes the elements in a list. Here is an example of how to use this function:

locals {

format_list = formatlist("Hello, %s!", ["A", "B", "C"])

}

output "format_list" {

value = local.format_list

}

# Result in:

format_list = tolist(["Hello, A!", "Hello, B!", "Hello, C!"])

length(list / string / map)

Returns the length of a string, list, or map.

locals {

list_length = length([10, 20, 30])

string_length = length("abcdefghij")

}

output "lengths" {

value = format("List length is %d. String length is %d", local.list_length, local.string_length)

}

# Result in:

lengths = "List length is 3. String length is 10"

join(separator, list)

Another useful function in Terraform is “join”. This function creates a string by concatenating together all elements of a list and a separator. For example, consider the following code:

locals {

join_string = join(",", ["a", "b", "c"])

}

output "join_string" {

value = local.join_string

}

# Result in:

The output of this code will be "a,b,c".

try(value, fallback)

Sometimes, you may want to use a value if it is usable, but fall back to another value if the first one is unusable. This can be achieved using the “try” function. For example:

locals {

map_var = {

test = "this"

}

try1 = try(local.map_var.test2, "fallback")

}

output "try1" {

value = local.try1

}

# Result:

The output of this code will be “fallback”, as the expression local.map_var.test2 is unusable.

can(expression)

A useful function for validating variables is “can”. It evaluates an expression and returns a boolean: true if the expression evaluates without an error, false otherwise. For example:

variable "a" {

type = any

validation {

condition = can(tonumber(var.a))

error_message = format("This is not a number: %v", var.a)

}

default = "1"

}

# Result:

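With the default value "1", tonumber succeeds, so the validation passes. If you pass a value that cannot be converted to a number, such as "abc", the validation fails with the error: “This is not a number: abc”.

flatten(list)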

In Terraform, you may work with complex data types to manage your infrastructure. In these cases, you may want to flatten a list of lists into a single list. This can be achieved using the “flatten” function, as in this example:

locals {

unflatten_list = [[1, 2, 3], [4, 5], [6]]

flatten_list = flatten(local.unflatten_list)

}

output "flatten_list" {

value = local.flatten_list

}

# Result:

The output of this code will be [1, 2, 3, 4, 5, 6].

keys(map) & values(map)

It may be useful to extract the keys or values from a map as a list. This can be achieved using the “keys” or “values” functions, respectively. For example:

locals {

key_value_map = {

"key1" : "value1",

"key2" : "value2"

}

key_list = keys(local.key_value_map)

value_list = values(local.key_value_map)

}

output "key_list" {

value = local.key_list

}

output "value_list" {

value = local.value_list

}

# Result:

key_list = ["key1", "key2"]

value_list = ["value1", "value2"]

slice(list, startindex, endindex)

Slice returns consecutive elements from a list from a startindex (inclusive) to an endindex (exclusive).

locals {

slice_list = slice([1, 2, 3, 4], 2, 4)

}

output "slice_list" {

value = local.slice_list

}

# Result:

slice_list = [3, 4]

range

Creates a range of numbers:

one argument(limit)

two arguments(initial_value, limit)

three arguments(initial_value, limit, step)

locals {

range_one_arg = range(3)

range_two_args = range(1, 3)

range_three_args = range(1, 13, 3)

}

output "ranges" {

value = format("Range one arg: %v. Range two args: %v. Range three args: %v", local.range_one_arg, local.range_two_args, local.range_three_args)

}

# Result:

ranges = "Range one arg: [0, 1, 2]. Range two args: [1, 2]. Range three args: [1, 4, 7, 10]"

lookup(map, key, fallback_value)

Retrieves a value from a map using its key. If the value is not found, it will return the default value instead

locals {

a_map = {

"key1" : "value1",

"key2" : "value2"

}

lookup_in_a_map = lookup(local.a_map, "key1", "test")

}

output "lookup_in_a_map" {

value = local.lookup_in_a_map

}

# Result:

This will return: lookup_in_a_map = "value1"

concat(lists)

Takes two or more lists and combines them in a single one

locals {

concat_list = concat([1, 2, 3], [4, 5, 6])

}

output "concat_list" {

value = local.concat_list

}

# Result:

concat_list = [1, 2, 3, 4, 5, 6]

merge(maps)

The merge function takes an arbitrary number of maps or objects and returns a single map or object that contains the merged elements of all of them. If the same key appears in more than one argument, the value from the later argument wins.

Let’s take a look at an example:

locals {

b_map = {

"key1" : "value1",

"key2" : "value2"

}

c_map = {

"key3" : "value3",

"key4" : "value4"

}

final_map = merge(local.b_map, local.c_map)

}

output "final_map" {

value = local.final_map

}

# Result:

final_map = {

"key1" = "value1"

"key2" = "value2"

"key3" = "value3"

"key4" = "value4"

}

zipmap(key_list, value_list)

Constructs a map from a list of keys and a list of values

locals {

key_zip = ["a", "b", "c"]

values_zip = [1, 2, 3]

zip_map = zipmap(local.key_zip, local.values_zip)

}

output "zip_map" {

value = local.zip_map

}

# Result

zip_map = {

"a" = 1

"b" = 2

"c" = 3

}

expanding function argument ...

This special argument works only in function calls and expands a list into separate arguments. Useful when you want to merge all maps from a list of maps

locals {

list_of_maps = [

{

"a" : "a"

"d" : "d"

},

{

"b" : "b"

"e" : "e"

},

{

"c" : "c"

"f" : "f"

},

]

expanding_map = merge(local.list_of_maps...)

}

output "expanding_map" {

value = local.expanding_map

}

# Result

expanding_map = {

"a" = "a"

"b" = "b"

"c" = "c"

"d" = "d"

"e" = "e"

"f" = "f"

}

file(path_to_file)

Reads the content of a file as a string and can be used in conjunction with other functions like jsondecode / yamldecode.

locals {

a_file = file("./a_file.txt")

}

output "a_file" {

value = local.a_file

}

# Result

The output would be the content of the file called a_file as a string.

templatefile(path, vars)

Reads the file from the specified path and changes the variables specified in the file between the interpolation syntax ${ … } with the ones from the vars map.

locals {

a_template_file = templatefile("./file.yaml", { "change_me" : "awesome_value" })

}

output "a_template_file" {

value = local.a_template_file

}

# Result

This will change the ${change_me} variable to awesome_value.

jsondecode(string)

Interprets a string as json.

locals {

a_jsondecode = jsondecode("{\"hello\": \"world\"}")

}

output "a_jsondecode" {

value = local.a_jsondecode

}

# Result

a_jsondecode = {

"hello" = "world"

}

jsonencode(value)

Encodes a value to a string using json

locals {

a_jsonencode = jsonencode({ "hello" = "world" })

}

output "a_jsonencode" {

value = local.a_jsonencode

}

# Result

a_jsonencode = "{\"hello\":\"world\"}"

yamldecode(string)

Parses a string as a subset of YAML, and produces a representation of its value.

locals {

a_yamldecode = yamldecode("hello: world")

}

output "a_yamldecode" {

value = local.a_yamldecode

}

# Result:

a_yamldecode = {

"hello" = "world"

}

yamlencode(value)

Encodes a given value to a string using YAML.

locals {

a_yamlencode = yamlencode({ "a" : "b", "c" : "d" })

}

output "a_yamlencode" {

value = local.a_yamlencode

}

# Result:

a_yamlencode = <<EOT

"a": "b"

"c": "d"

EOT

You can use Terraform functions to make your life easier and to write better code. Keeping your configuration as DRY as possible improves readability and makes updating it easier, so functions are a must-have in your configurations.

These are just the functions that I am using the most, but some honorable mentions are element, base64encode, base64decode, formatdate, uuid, and distinct.
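A quick sketch of a few of these honorable mentions; the local names are hypothetical.

```hcl
locals {
  zones = ["a", "b", "c"]

  # element wraps around when the index is past the end of the list
  wrapped = element(local.zones, 4) # → "b"

  # distinct keeps the first occurrence of every value
  unique = distinct(["a", "b", "a", "c"]) # → ["a", "b", "c"]

  encoded = base64encode("hello")       # → "aGVsbG8="
  decoded = base64decode(local.encoded) # → "hello"
}
```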

Originally posted on Medium here.

10. Working with files

When it comes to Terraform, working with files is pretty straightforward. Apart from the .tf and .tfvars files, you can use json, yaml, or whatever other file types that support your needs.

In the last article, I mentioned a couple of functions that interact with files, like file & templatefile, and right now I want to show you how easy it is to interact with a lot of files and use some of their content as variables for your configuration.

For the following examples, let’s suppose we are using this yaml file:

namespaces:

ns1:

annotations:

imageregistry: "https://hub.docker.com/"

labels:

color: "green"

size: "big"

ns2:

labels:

color: "red"

size: "small"

ns3:

annotations:

imageregistry: "https://hub.docker.com/"

Reading a file only if it exists

locals {

my_file = fileexists("./my_file.yaml") ? file("./my_file.yaml") : null

}

output "my_file" {

value = local.my_file

}

We are using two functions for this and a ternary operator. The fileexists function checks if the file exists and returns true or false based on the result. In our case above, if the file exists, my_file will be the content of my_file.yaml as a string, otherwise it will be null.

Let’s take this up a notch and transform this into a real use case.

Reading the yaml file and using it in a configuration if it exists

locals {

namespaces = fileexists("./my_file.yaml") ? yamldecode(file("./my_file.yaml")).namespaces : var.namespaces

}

output "my_file" {

value = local.namespaces

}

resource "kubernetes_namespace" "this" {

for_each = local.namespaces

metadata {

name = each.key

annotations = lookup(each.value, "annotations", {})

labels = lookup(each.value, "labels", {})

}

}

variable "namespaces" {

type = map(object({

annotations = optional(map(string), {})

labels = optional(map(string), {})

}))

default = {

ns1 = {}

ns2 = {}

ns3 = {}

}

}

In the example above, we check whether the file exists. If it does, we load the namespaces from the YAML file; otherwise, we fall back to the namespaces variable. Because the file exists here, yamldecode turns the loaded string into a map, which we pass to for_each. This can be extremely useful in some use cases.

Example Terraform plan:

# kubernetes_namespace.this["ns1"] will be created

+ resource "kubernetes_namespace" "this" {

+ id = (known after apply)

+ metadata {

+ annotations = {

+ "imageregistry" = "https://hub.docker.com/"

}

+ generation = (known after apply)

+ labels = {

+ "color" = "green"

+ "size" = "big"

}

+ name = "ns1"

+ resource_version = (known after apply)

+ uid = (known after apply)

}

}

# kubernetes_namespace.this["ns2"] will be created

+ resource "kubernetes_namespace" "this" {

+ id = (known after apply)

+ metadata {

+ generation = (known after apply)

+ labels = {

+ "color" = "red"

+ "size" = "small"

}

+ name = "ns2"

+ resource_version = (known after apply)

+ uid = (known after apply)

}

}

# kubernetes_namespace.this["ns3"] will be created

+ resource "kubernetes_namespace" "this" {

+ id = (known after apply)

+ metadata {

+ annotations = {

+ "imageregistry" = "https://hub.docker.com/"

}

+ generation = (known after apply)

+ name = "ns3"

+ resource_version = (known after apply)

+ uid = (known after apply)

}

}

Using templatefile on a YAML file and using its configuration if it exists

We can take this example further and add some placeholders that templatefile will substitute. To do that, we must first make some changes to our initial YAML file.

namespaces:
  ns1:
    annotations:
      imageregistry: ${image_registry_ns1}
    labels:
      color: "green"
      size: "big"
  ns2:
    labels:
      color: ${color_ns2}
      size: "small"
  ns3:
    annotations:
      imageregistry: "https://hub.docker.com/"

The only change we need to make to the code above is in the locals block:

locals {

namespaces = fileexists("./my_file.yaml") ? yamldecode(templatefile("./my_file.yaml", { image_registry_ns1 = "ghcr.io", color_ns2 = "black" })).namespaces : var.namespaces

}

The two placeholders defined inside the YAML file with the ${} syntax will be replaced by the values passed to the templatefile function.
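Hard-coding the template values is fine for a demo, but in practice you would probably pass them in from input variables. Here is a minimal sketch of that idea, assuming the templated my_file.yaml sits next to the configuration; the variable names and the ns2_color output are hypothetical and only serve the example.

```hcl
# Hypothetical input variables that feed the template placeholders
variable "image_registry_ns1" {
  type    = string
  default = "ghcr.io"
}

variable "color_ns2" {
  type    = string
  default = "black"
}

locals {
  # Render the template first, then decode the resulting YAML string.
  # The keys of the second argument must match the ${...} names in the file.
  rendered_namespaces = yamldecode(templatefile("${path.module}/my_file.yaml", {
    image_registry_ns1 = var.image_registry_ns1
    color_ns2          = var.color_ns2
  })).namespaces
}

# Shows the substituted value for ns2 so the rendering is easy to verify
output "ns2_color" {
  value = local.rendered_namespaces.ns2.labels.color
}
```

Keeping the values in variables means you can change them per environment through .tfvars files without touching the YAML template.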

Fileset

The fileset function helps with identifying all the files inside a directory that match a pattern.

locals {

yaml_files = fileset(".", "*.yaml")

}

The above returns all the YAML files inside the current directory. The result is a set of file paths, relative to the directory you passed in. Not very useful on its own, right?

Well, you can use the file function to load the content of these files as strings. You can take them one by one by converting the set to a list with tolist and using list indexes, but if you want to take it up a notch, you can use a for expression and group them together in something that makes sense.
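As a rough sketch of both approaches, assuming the YAML files live in the module directory (all local names here are made up for illustration):

```hcl
locals {
  # fileset returns a set of paths relative to the directory in the first argument
  yaml_files = fileset(path.module, "*.yaml")

  # Sets cannot be indexed directly, so convert to a list before picking one item.
  # The length check avoids an error when no file matches the pattern.
  first_yaml_file = length(local.yaml_files) > 0 ? tolist(local.yaml_files)[0] : null

  # A for expression builds a map of file name => raw file content
  yaml_contents = {
    for name in local.yaml_files : name => file("${path.module}/${name}")
  }
}

output "yaml_file_names" {
  value = keys(local.yaml_contents)
}
```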

Let’s suppose you have 2 other yaml files that are similar to the one we used at the beginning of the post. We can group them all together in a single variable:

locals {

namespaces = merge([for my_file in fileset(".", "*.yaml") : yamldecode(file(my_file))["namespaces"]]...)

}

output "namespaces" {

value = local.namespaces

}

Because the files have exactly the same structure and only the names of the namespaces differ, this will result in:

namespaces = {

"ns1" = {

"annotations" = {

"imageregistry" = "https://hub.docker.com/"

}

"labels" = {

"color" = "green"

"size" = "big"

}

}

"ns2" = {

"labels" = {

"color" = "red"

"size" = "small"

}

}

"ns3" = {

"annotations" = {

"imageregistry" = "https://hub.docker.com/"

}

}

"ns4" = {

"annotations" = {

"imageregistry" = "https://hub.docker.com/"

}

"labels" = {

"color" = "green"

"size" = "big"

}

}

"ns5" = {

"labels" = {

"color" = "red"

"size" = "small"

}

}

"ns6" = {

"annotations" = {

"imageregistry" = "https://hub.docker.com/"

}

}

"ns7" = {

"annotations" = {

"imageregistry" = "https://hub.docker.com/"

}

"labels" = {

"color" = "green"

"size" = "big"

}

}

"ns8" = {

"labels" = {

"color" = "red"

"size" = "small"

}

}

"ns9" = {

"annotations" = {

"imageregistry" = "https://hub.docker.com/"

}

}

}

You can check another cool example here.

Other Examples

You can get filesystem-related information using these named values (they are references, not functions):

path.module: the filesystem path of the module where the expression is placed. This is useful for accessing files or directories that are relative to that module.

path.root: the filesystem path of the root module of the configuration. This is useful for accessing files or directories located at the project's root.

path.cwd: the filesystem path of the working directory Terraform was started from, before any -chdir argument is applied. This is useful for accessing files or directories that are relative to the directory where Terraform is run from.
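A small sketch of how these values are typically used. The local names are hypothetical, and my_file.yaml is assumed to sit next to the module's .tf files:

```hcl
locals {
  # Building the path from path.module keeps the lookup working
  # even when this code is called as a child module from another directory
  namespaces_file = "${path.module}/my_file.yaml"

  namespaces_raw = fileexists(local.namespaces_file) ? file(local.namespaces_file) : null
}

# Prints the three paths so you can compare them for your own layout
output "paths" {
  value = {
    module = path.module
    root   = path.root
    cwd    = path.cwd
  }
}
```

When you run Terraform from the root module directory without -chdir, all three usually point to the same place; they start to differ once child modules or -chdir are involved.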

There are some other file functions that can be leveraged to accommodate some use cases, but to be honest, I’ve used them only once or twice.

Still, I believe mentioning them will bring some value.

basename: takes a path and returns only the last part of it

E.G: basename("/Users/user1/hello.txt") will return hello.txt.

dirname: behaves exactly opposite to basename, returning everything except the last part of the path

E.G: dirname("/Users/user1/hello.txt") will return /Users/user1

pathexpand: takes a path that starts with a ~ and expands it by substituting the home directory of the logged-in user. If the path doesn’t start with a ~, this function will not do anything

E.G: You are logged in as user1 on a Mac: pathexpand("~/hello.txt") will return /Users/user1/hello.txt

filebase64: reads the content of a file and returns it as base64-encoded text.

abspath: takes a string containing a filesystem path and converts it to an absolute path, resolving relative paths against the current working directory
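Here is a minimal sketch that puts these functions side by side. The example path and the local names are illustrative assumptions, and the results of pathexpand and abspath depend on the machine you run this on:

```hcl
locals {
  example_path = "/Users/user1/hello.txt"

  file_name = basename(local.example_path) # "hello.txt"
  directory = dirname(local.example_path)  # "/Users/user1"

  # Depends on the home directory of the user running Terraform
  expanded = pathexpand("~/hello.txt")

  # Absolute path of the current module directory
  module_abs_path = abspath(path.module)

  # filebase64 fails if the file is missing, so guard it with fileexists
  yaml_path    = "${path.module}/my_file.yaml"
  yaml_encoded = fileexists(local.yaml_path) ? filebase64(local.yaml_path) : null
}

output "path_function_examples" {
  value = {
    file_name       = local.file_name
    directory       = local.directory
    expanded        = local.expanded
    module_abs_path = local.module_abs_path
  }
}
```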

Originally posted on Medium here.

11. Understanding Terraform State

Terraform state is a critical component of Terraform that enables users to define, provision, and manage infrastructure resources using declarative code. In this blog post, we will explore the importance of Terraform state, how it works, and best practices for managing it.

The state is a JSON file that tracks the infrastructure resources managed by Terraform. By default, the file is named terraform.tfstate, and whenever the state is updated, a backup of the previous version is written to terraform.tfstate.backup.

This state file is stored locally by default, but can also be stored remotely using a remote backend such as Amazon S3, Azure Blob Storage, Google Cloud Storage, or HashiCorp Consul. The Terraform state file includes the current configuration of resources, their dependencies, and metadata such as resource IDs and resource types. There are a couple of products that help with managing state and provide a sophisticated workflow around Terraform like Spacelift or Terraform Cloud.

How does it work?

When Terraform is executed, it reads the configuration files and the current state file to determine the changes required to bring the infrastructure to the desired state. Terraform then creates an execution plan that outlines the changes to be made to the infrastructure. If the plan is accepted, Terraform applies the changes to the infrastructure and updates the state file with the new state of the resources.

You can use the terraform state command to manage your state.

terraform state list: lists all the resources currently tracked in the Terraform state.

terraform state show: displays the details of a specific resource in the state, including all of its attributes.

terraform state pull: retrieves the current state from the backend and prints it to standard output, so you can redirect it to a local file. This is useful when you need to inspect or manually edit a remote state.

terraform state push: uploads a local state file to the backend. This is useful after you have made manual changes to a pulled copy of the remote state.

terraform state rm: removes a resource from the state. The resource is not destroyed; Terraform simply stops managing it.

terraform state mv: moves a resource to a different address in the state, which is how you rename a resource or move it into a module without destroying and recreating it.

terraform state replace-provider: replaces the provider recorded in the state for the resources that use it. This is useful when switching a provider to a different source address, such as a fork or a new registry namespace.
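If you prefer to keep renames in code instead of running terraform state mv by hand, Terraform 1.1 and later (and OpenTofu) also support moved blocks, which record the same address change declaratively. A minimal sketch, assuming a namespace that used to be declared as kubernetes_namespace.this; both resource names are hypothetical:

```hcl
# The resource under its new name
resource "kubernetes_namespace" "team" {
  metadata {
    name = "team"
  }
}

# Tells Terraform that the object tracked at the old address
# should now be tracked at the new one, instead of being destroyed and recreated
moved {
  from = kubernetes_namespace.this
  to   = kubernetes_namespace.team
}
```

The next plan should then report the resource as moved rather than as one deletion plus one creation.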

Supported backends

Amazon S3 Backend